For speed's sake, after gathering all subcategories from a given parent category, the program optionally saves all of them to a text file so that subcategories can be found faster on later runs.

To determine how similar a page is to a category, the program first enumerates the categories the selected page belongs to. It then loops through all of the found categories, using a variable I will call A here, and checks whether A belongs to the subcategories generated from the 'parent' category. From this it computes a 'score' for the page. If the score is >= a prespecified value (the default is .5: half of all A's should be subcategories of the parent category), then the page is a valid subpage. If not, the program removes that page from the category list and loops on.

This project uses type annotations and mypy type checking, so you can be sure you are passing the right types to functions. If you're using Atom to edit your code, I recommend using atom-linter-mypy to do type linting.

I'm open to anyone contributing, especially if they know of a way to make this faster or take up less drive space for locally stored subcategories. Email me at and we can talk stuff out.

Wiki Robot can post text to a chat at a scheduled time. You can set up multiple texts and configure a specific posting time for each text. At any time you can also display a text using its ID.

Give the bot permission to delete messages.

/wiki check - check if the bot is installed correctly.

/wiki reload_admins - ask the bot to reload admin IDs for the current chat. The admins list is collected once and cached.

/wiki config - see the bot configuration for the current chat.

/wiki setmsg TEXTID - use this command in reply to the message you want to remember as TEXTID. Here TEXTID could be any alphanumeric token like "rules", "faq", "text44".

/wiki cron TEXTID TIME - configure posting time(s) for the TEXTID text. TIME takes one of two forms. First, it could be "h1" (every hour), "h2" (every two hours), "h3", "h4", "h6" or "h12". Example: /wiki cron TEXTID h4. Second, it might be a list of hour:minutes pairs delimited by commas.
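The similarity check described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function names `similarity_score` and `is_valid_page` and the sample category names are hypothetical, and the score is assumed to be the fraction of the page's categories found among the gathered subcategories.

```python
from typing import Iterable, Set


def similarity_score(page_categories: Iterable[str], subcategories: Set[str]) -> float:
    """Return the fraction of the page's categories (each one an "A")
    that appear in the subcategory set gathered from the parent category."""
    categories = list(page_categories)
    if not categories:
        # A page with no categories cannot match anything.
        return 0.0
    hits = sum(1 for a in categories if a in subcategories)
    return hits / len(categories)


def is_valid_page(page_categories: Iterable[str],
                  subcategories: Set[str],
                  threshold: float = 0.5) -> bool:
    """A page is kept when its score is >= the threshold (default .5)."""
    return similarity_score(page_categories, subcategories) >= threshold


# Hypothetical sample data: subcategories gathered from some parent category.
gathered = {"Physics", "Optics", "Mechanics"}

# Two of four categories match, so the score is 0.5 and the page is kept.
print(is_valid_page(["Optics", "Mechanics", "1905 works", "German physicists"], gathered))

# One of three categories matches, so the score is below .5 and the page is dropped.
print(is_valid_page(["Optics", "1905 works", "German physicists"], gathered))
```

Raising `threshold` makes the check stricter, at the cost of rejecting pages that belong to the category but also carry many unrelated categories.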

Wikibot everipedia code#

If you want a higher similarity value then you might sacrifice other valid pages in

If you're using it in your own Python code, the best way to set it up is `from wikiBot import WikiBot` followed by `wb = WikiBot (`

More info is available by using help(wikiBot).

How It Works

The most important part of this program is the Wikipedia API: it allows the program to gather all of the subcategories of a given category in a fast(ish) and usable manner, and to get the pages belonging to a given category.

The bulk of my code focuses on iteratively getting the subcategories at a given depth in a tree, adding them to an array with all subcategories of a given 'parent' category, and continuing on in that fashion until there are no more subcategories or the program has fetched to the maximum tree depth allowed. For example, if a subcategory chain went Category A -> Category B -> Category C -> Category D -> ... and the maximum tree depth was 3, then the code would stop gathering subcategories for Category C, D, E.

After all subcategories of a given parent category have been amassed in some list L, the program randomly chooses a category C from L, finds the pages belonging to C, chooses a random page P from C and returns the URL pointing to P.
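The depth-limited gathering and random pick described above could look roughly like the sketch below. It is not the project's implementation. The `SUBCATS` and `PAGES` dictionaries are hypothetical stand-ins for Wikipedia API calls so the example runs offline, and the names `gather_subcategories` and `random_page_url` are invented for illustration. The depth convention is also an assumption: the parent sits at depth 0 and `max_depth` counts the levels below it, which may differ by one from the original program.

```python
import random
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for the API call: category -> direct subcategories.
SUBCATS: Dict[str, List[str]] = {
    "Category A": ["Category B"],
    "Category B": ["Category C"],
    "Category C": ["Category D"],
    "Category D": ["Category E"],
    "Category E": [],
}
# Hypothetical stand-in for the API call: category -> page titles.
PAGES: Dict[str, List[str]] = {
    "Category A": ["Page one"],
    "Category B": ["Page two", "Page three"],
    "Category C": [],
}


def gather_subcategories(parent: str, max_depth: int,
                         get_subcats: Callable[[str], List[str]]) -> List[str]:
    """Collect the parent and its subcategories level by level,
    stopping when a level is empty or max_depth levels were fetched."""
    found = [parent]
    seen = {parent}
    frontier = [parent]
    for _ in range(max_depth):
        next_frontier: List[str] = []
        for category in frontier:
            for sub in get_subcats(category):
                if sub not in seen:  # guard against cycles in the category graph
                    seen.add(sub)
                    found.append(sub)
                    next_frontier.append(sub)
        if not next_frontier:
            break
        frontier = next_frontier
    return found


def random_page_url(categories: List[str],
                    get_pages: Callable[[str], List[str]],
                    rng: random.Random) -> Optional[str]:
    """Try categories in random order and return the URL of a random page
    from the first one that has pages, or None if none has any."""
    candidates = list(categories)
    rng.shuffle(candidates)
    for category in candidates:
        pages = get_pages(category)
        if pages:
            title = rng.choice(pages)
            return "https://en.wikipedia.org/wiki/" + title.replace(" ", "_")
    return None


categories = gather_subcategories("Category A", 2, lambda c: SUBCATS.get(c, []))
print(categories)
print(random_page_url(categories, lambda c: PAGES.get(c, []), random.Random(0)))
```

Passing the lookup functions as arguments keeps the traversal independent of how categories are fetched, so the same loop would work against the real API or against a saved subcategory file.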

Use a tree_depth of 3 or 4; more than 4 will bring loosely related categories into the subcategories.

Make sure you have Pip installed!

git clone

python wikiBot.py -h shows the usage of the program:

Get a random page from a wikipedia category

category: The category you wish to get a page from.

-h, --help: show this help message and exit

How far down to traverse the subcategory tree

What percent of page categories need to be in

-s, --save: Save subcategories to a file for quick re-runs

-r, --regen: Regenerate the subcategory file

-c, --check: After finding page check to see that it truly fits in

Wikibot everipedia install#

Welcome to WikiBot! This is a small program to get a random page from a Wikipedia category AND its subcategories (up to a specified depth).

Installation

All you need to do is clone this repo and install the dependencies.

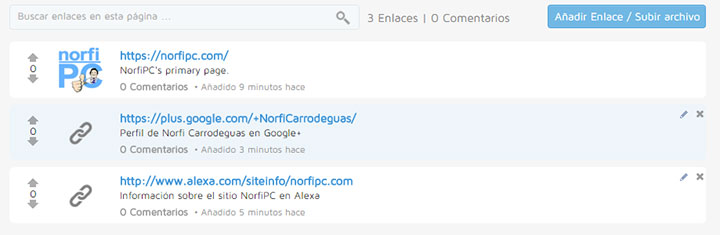
